Microsoft says its conversational speech recognition system is now as accurate as professional human transcribers. Over the past two years, Microsoft Artificial Intelligence and Research has tested the system on recordings from the Switchboard corpus, a collection of around 2,400 telephone conversations recorded by researchers in the 1990s. The goal was to match the accuracy of human transcribers, and the system did so without one of their advantages: the humans could listen to each recording more than once, a luxury not afforded to the recognition system. Microsoft says researchers cut the error rate by about 12 percent compared with last year by improving the system's neural-network-based acoustic and language models. The software can now also take an entire conversation into account and adapt its transcription accordingly.
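Speech recognition accuracy on benchmarks such as Switchboard is usually reported as a word error rate: the number of word substitutions, deletions and insertions needed to turn the system's transcript into the reference transcript, divided by the number of words in the reference. The sketch below computes that metric with a word-level edit distance. It assumes the 12 percent figure is a relative reduction, which the article does not state. The function names and the error rates in the example are hypothetical, chosen only for illustration, and are not Microsoft's code or results.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length,
    computed as a word-level Levenshtein (edit) distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    # d[i][j] = minimum edits turning the first i reference words
    # into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match if cost == 0)
            )
    return d[len(ref)][len(hyp)] / len(ref)


def relative_reduction(old_rate: float, new_rate: float) -> float:
    """Fraction by which new_rate improves on old_rate (0.12 means 12 percent)."""
    return (old_rate - new_rate) / old_rate


if __name__ == "__main__":
    # One deleted word ("to") and one inserted word ("now") over 5 reference words.
    print(word_error_rate("i want to go home", "i want go home now"))
    # Hypothetical rates: a drop from 10% to 8.8% is a 12% relative reduction.
    print(relative_reduction(0.10, 0.088))
```

Under this reading, a 12 percent reduction refers to the error rate itself shrinking by that fraction, not to 12 percentage points of accuracy.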

Improvement

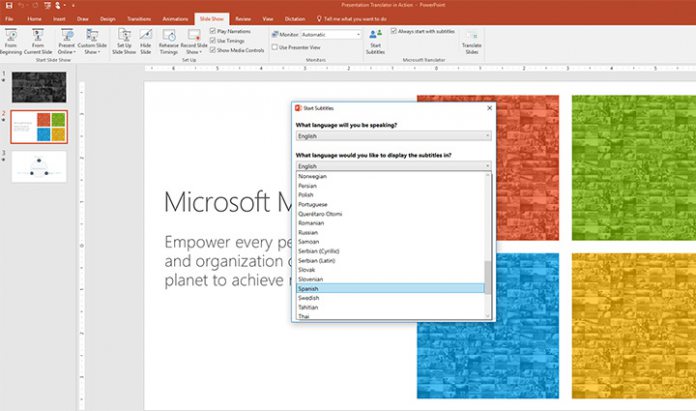

Contextual and prediction improvements allow the system to judge which words and phrases are likely to come next, mimicking the way people anticipate the flow of words in a conversation. “Reaching human parity with an accuracy on par with humans has been a research goal for the last 25 years,” the company says. “Microsoft’s willingness to invest in long-term research is now paying dividends for our customers in products and services such as Cortana, Presentation Translator, and Microsoft Cognitive Services. It’s deeply gratifying to our research teams to see our work used by millions of people each day.”
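The idea of judging which word is likely to come next can be illustrated with a very small language model. Microsoft describes its language models as neural-network-based; the sketch below deliberately swaps in a much simpler count-based bigram model, which estimates the probability of each next word from how often it followed the previous word in some training text. The training sentences and function names are hypothetical and serve only to show the principle.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each preceding word.
    "<s>" marks the start of a sentence."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], prev: str, k: int = 3) -> list[tuple[str, float]]:
    """Return up to k most likely next words after `prev`,
    each paired with its estimated probability."""
    followers = counts.get(prev.lower())
    if not followers:
        return []  # word never seen as a predecessor
    total = sum(followers.values())
    return [(word, n / total) for word, n in followers.most_common(k)]


if __name__ == "__main__":
    corpus = ["how are you", "how are things", "how is it going"]
    model = train_bigrams(corpus)
    # "are" followed "how" twice and "is" once.
    print(predict_next(model, "how"))
```

A recognizer can use scores like these to prefer a transcription such as "how are you" over an acoustically similar but less probable word sequence; neural models extend the same idea by conditioning on much longer context than a single previous word.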